Introduction

The Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to large language models (LLMs). You can think of MCP as a USB-C port for AI. Just as USB-C gives you a standard way to connect your phone or laptop to chargers, displays, and other devices, MCP gives AI models a standard way to connect to data sources, tools, and apps. This makes it easier for developers to build robust, flexible AI applications.

MCP helps AI models better understand the context in which they work. Think of it as giving the model a clear instruction manual that gathers background information, rules, goals, and memory in one place, which helps the model give more accurate, relevant, and helpful responses. Whether you’re building a chatbot, a search assistant, or a decision-making tool, MCP gives you more control over how the model behaves and what it knows. MCP was created and open-sourced by Anthropic.

What Can MCP Servers Do?

- Resources – Expose data and content from your server to LLMs

- Prompts – Create reusable prompt templates and workflows

- Tools – Enable LLMs to perform actions through your server
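Under the hood, a client reaches each of these three capabilities with a JSON-RPC 2.0 request whose method name comes from the MCP specification (`resources/read`, `prompts/get`, `tools/call`). The minimal Python sketch below only builds those request objects. The resource URI, prompt name, tool name, and arguments are hypothetical placeholders, and a real client would send these messages over a transport such as stdio.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Resources: read data the server exposes, addressed by URI (hypothetical URI).
read_resource = make_request("resources/read", {"uri": "file:///notes/todo.txt"})

# Prompts: fetch a reusable template filled in with arguments (hypothetical name).
get_prompt = make_request(
    "prompts/get", {"name": "summarize", "arguments": {"style": "brief"}}
)

# Tools: ask the server to perform an action (hypothetical tool and arguments).
call_tool = make_request(
    "tools/call", {"name": "get_weather", "arguments": {"city": "London"}}
)

if __name__ == "__main__":
    for message in (read_resource, get_prompt, call_tool):
        print(json.dumps(message))
```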

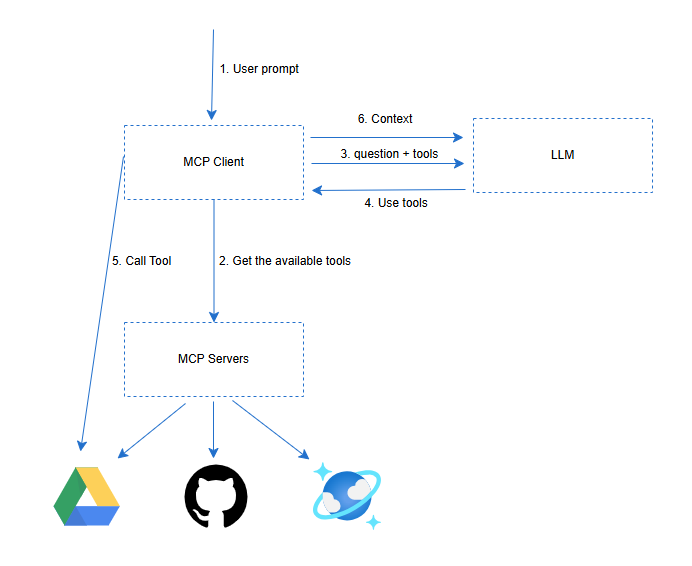

To understand the Model Context Protocol (MCP), let’s break down the flow illustrated in the diagram. Imagine a user interacting with an AI-powered application. The user starts the process with a simple input (Step 1: “User input –> do xyz”). The request is picked up by the MCP Client, which runs inside an MCP-aware application such as the Cursor code editor.

The MCP Client then asks the MCP Servers which tools are available to handle the request (Step 2: “Brother, what tools do you have?”). These tools can include integrations such as Slack, a PostgreSQL database, or Google Drive.
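Step 2 corresponds to the MCP `tools/list` request. Each tool in the server’s reply carries a `name`, a human-readable `description`, and an `inputSchema` (a JSON Schema describing its arguments). The sketch below hard-codes a hypothetical reply from a PostgreSQL server and checks that a proposed call supplies every required argument. The tool name and the `find_tool` and `check_arguments` helpers are illustrative assumptions, not part of any SDK.

```python
# Hypothetical result of a "tools/list" request to a PostgreSQL MCP server.
tools_list_result = {
    "tools": [
        {
            "name": "query",
            "description": "Run a read-only SQL query",
            "inputSchema": {
                "type": "object",
                "properties": {"sql": {"type": "string"}},
                "required": ["sql"],
            },
        }
    ]
}


def find_tool(result: dict, name: str) -> dict:
    """Return the tool definition with the given name, or raise KeyError."""
    for tool in result["tools"]:
        if tool["name"] == name:
            return tool
    raise KeyError(f"unknown tool: {name}")


def check_arguments(tool: dict, arguments: dict) -> list:
    """Return the required argument names missing from `arguments`."""
    required = tool["inputSchema"].get("required", [])
    return [arg for arg in required if arg not in arguments]


if __name__ == "__main__":
    tool = find_tool(tools_list_result, "query")
    print(check_arguments(tool, {}))  # prints ['sql']
```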

Next, the MCP Client sends the user’s query and the list of available tools to the LLM (Step 3). The LLM decides which tools are needed and instructs the MCP Client to use them (Step 4: “Use tools xyz”).

Following the LLM’s instructions, the MCP Client calls the selected tools through the MCP Servers (Step 5), which perform operations such as querying a database, posting to Slack, or fetching a file from Google Drive. Finally, the results are returned to the LLM as context (Step 6), so it can generate an informed, complete response for the user.

This architecture abstracts tool orchestration behind a clean interface, allowing developers to build intelligent systems where LLMs and real-world tools can collaborate seamlessly.
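The six steps can be simulated end to end with stand-ins for the server and the model. In this sketch, `FakeServer` and `fake_llm` are hypothetical stubs (a real client would talk to servers over an MCP transport and to a real model API). The point is the control flow: discover tools, let the model choose, execute the call, and feed the result back.

```python
from typing import Callable, Dict, List


class FakeServer:
    """Hypothetical stand-in for an MCP server exposing one tool."""

    def list_tools(self) -> List[str]:
        return ["get_order_status"]

    def call_tool(self, name: str, arguments: Dict[str, str]) -> str:
        if name == "get_order_status":
            return f"Order {arguments['order_id']} has shipped"
        raise ValueError(f"unknown tool: {name}")


def fake_llm(query: str, tools: List[str], tool_result: str = "") -> dict:
    """Hypothetical model: requests a tool first, then answers from its result."""
    if not tool_result:
        # Step 4: the model picks a tool and its arguments.
        return {"tool": tools[0], "arguments": {"order_id": "42"}}
    # Step 6: the model turns the tool result into a final answer.
    return {"answer": f"Good news: {tool_result.lower()}."}


def run_client(query: str, server: FakeServer, llm: Callable[..., dict]) -> str:
    """Step 1 is the incoming `query`; steps 2 to 6 happen below."""
    tools = server.list_tools()          # Step 2: discover available tools
    decision = llm(query, tools)         # Step 3: send query and tool list
    if "answer" in decision:             # The model may answer without tools
        return decision["answer"]
    result = server.call_tool(decision["tool"], decision["arguments"])  # Step 5
    return llm(query, tools, result)["answer"]  # Step 6: result back to the LLM


if __name__ == "__main__":
    print(run_client("Where is order 42?", FakeServer(), fake_llm))
```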

Today, MCP servers are usually run locally, largely for security reasons.

Managed (hosted) MCP servers may become common soon.

Getting started with MCP using Claude for Windows

Download and install Claude for Windows.

After installation, open File -> Settings -> Developer and click Edit Config. This opens the configuration file (claude_desktop_config.json).

Add the following configuration:

```json
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch"]
    }
  }
}
```
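If you prefer to script this step, the sketch below merges an MCP server entry into an existing config file without overwriting other servers or settings. The default path (`%APPDATA%\Claude\claude_desktop_config.json`) is an assumption about where Claude Desktop stores its config on Windows, so check which file Edit Config actually opens. The `add_server` and `default_config_path` names are hypothetical.

```python
import json
import os
from pathlib import Path


def default_config_path() -> Path:
    """Assumed Windows location; confirm via Settings -> Developer -> Edit Config."""
    base = os.environ.get("APPDATA", ".")
    return Path(base) / "Claude" / "claude_desktop_config.json"


def add_server(config_path: Path, name: str, command: str, args: list) -> dict:
    """Add or replace one entry under "mcpServers", keeping everything else."""
    config = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8").strip()
        config = json.loads(text) if text else {}
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config
```

For example, `add_server(default_config_path(), "fetch", "uvx", ["mcp-server-fetch"])` writes the same `fetch` entry shown in the configuration above.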

- mcpServers – The top-level object that registers MCP servers. Each key names one server, and its value tells Claude Desktop how to start that server. Servers can provide capabilities such as web fetching, file access, or database queries.

- fetch – Defines one MCP server named fetch. Claude uses this name to identify the server and the tools it provides.

- "command": "uvx"

  - Tells Claude which executable to run to start this MCP server.

  - uvx is a command that ships with uv, the Python package manager from Astral. It runs a Python tool in a temporary, isolated environment, downloading the package first if needed, so uv must be installed for this configuration to work.

- "args": ["mcp-server-fetch"]

  - These are the arguments passed to uvx.

  - Here, uvx runs the mcp-server-fetch package, the reference Fetch server published on PyPI.

  - This package implements the server logic: it fetches web pages by URL and converts their content to Markdown so the model can read it.

This configuration lets Claude Desktop start a local MCP server named fetch using uvx. When Claude needs to retrieve a web page, for example to read a URL you mention in a prompt, it calls the fetch server’s tool instead of relying only on what the model already knows.
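Concretely, Claude Desktop starts the server as a child process (`uvx mcp-server-fetch`) and exchanges JSON-RPC messages with it over the process’s standard input and output, one JSON object per line (MCP’s stdio transport). The sketch below builds the command line from a config entry and encodes the `initialize` request a client sends first. The client name and version are placeholders, and `2024-11-05` is one published protocol revision that a real client may replace with a newer one.

```python
import json
import subprocess
from typing import List


def server_argv(config: dict, name: str) -> List[str]:
    """Turn one "mcpServers" entry into an argv list, e.g. ["uvx", "mcp-server-fetch"]."""
    entry = config["mcpServers"][name]
    return [entry["command"], *entry.get("args", [])]


def encode_message(message: dict) -> bytes:
    """stdio transport: one JSON-RPC message per line, with no embedded newlines."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


INITIALIZE = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # placeholder
    },
}


def start_server(argv: List[str]) -> subprocess.Popen:
    """Spawn the server and send initialize (requires the command, e.g. uvx, on PATH).

    A full client would then read the server's reply from proc.stdout and
    send a "notifications/initialized" notification before using any tools.
    """
    proc = subprocess.Popen(argv, stdin=subprocess.PIPE, stdout=subprocess.PIPE)
    proc.stdin.write(encode_message(INITIALIZE))
    proc.stdin.flush()
    return proc
```

With uv installed, `proc = start_server(server_argv(config, "fetch"))` followed by `proc.stdout.readline()` should return the server’s `initialize` response as a single JSON line.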

Key Fields

- mcpServers – Registers the local MCP servers that give Claude extra capabilities

- fetch – The server’s name (used by Claude to identify it)

- command – The executable to launch (here, uvx)

- args – The arguments passed to the command (here, the mcp-server-fetch package)
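Because a malformed entry is a common reason a server fails to show up, it can help to sanity-check the file before restarting. This hypothetical `validate_config` helper checks only the fields summarized above: a string `command` and a list-of-strings `args`. Claude Desktop also accepts other optional fields, such as `env`, which this sketch ignores.

```python
import json
from typing import List


def validate_config(text: str) -> List[str]:
    """Return a list of problems found in a claude_desktop_config.json string."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict):
        return ['missing or non-object "mcpServers"']
    problems = []
    for name, entry in servers.items():
        # Every server needs an executable to launch.
        if not isinstance(entry, dict) or not isinstance(entry.get("command"), str):
            problems.append(f'{name}: "command" must be a string')
            continue
        # Arguments are optional, but must be strings when present.
        args = entry.get("args", [])
        if not isinstance(args, list) or not all(isinstance(a, str) for a in args):
            problems.append(f'{name}: "args" must be a list of strings')
    return problems
```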

Note: After changing the configuration, restart the Claude application so the changes take effect.

Testing:

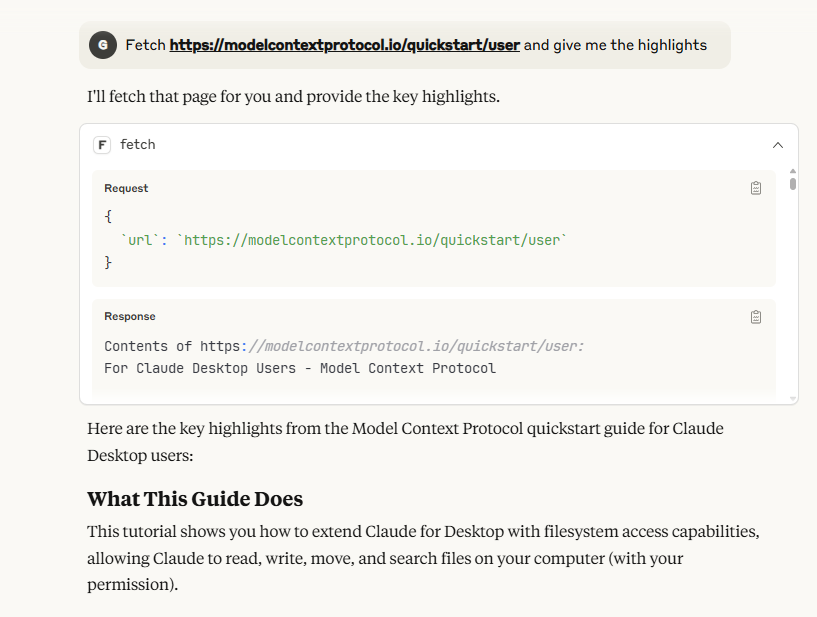

You should now see the search and tools icon in the prompt field. Try the prompt “Fetch https://modelcontextprotocol.io/quickstart/user and give me the highlights”. Claude will first ask for your permission to use the fetch tool. Once you allow it, Claude should fetch the page and summarize its highlights.
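When you run that prompt, Claude issues a `tools/call` request to the `fetch` server with the URL as the `url` argument, but only after you grant permission. The sketch below mimics that approval step. `call_with_approval` and its `ask_user` and `send` callbacks are hypothetical; the real permission dialog lives inside Claude Desktop.

```python
from typing import Callable, Optional

# The tools/call request for the test prompt (the request id is arbitrary).
FETCH_CALL = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {
        "name": "fetch",
        "arguments": {"url": "https://modelcontextprotocol.io/quickstart/user"},
    },
}


def call_with_approval(
    request: dict,
    ask_user: Callable[[str], bool],
    send: Callable[[dict], dict],
) -> Optional[dict]:
    """Forward a tools/call request to the server only if the user approves."""
    params = request["params"]
    question = f"Allow tool '{params['name']}' with {params['arguments']}?"
    if not ask_user(question):
        return None  # Denied: nothing reaches the server.
    return send(request)
```

In an interactive script, `ask_user` could be `lambda q: input(q + " [y/N] ") == "y"`, with `send` writing the request to the server process.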

Risks in MCP:

When you give a language model the ability to act on your computer, it may do things you didn’t intend, some of which could be harmful. So when you build MCP servers, apply the principle of least privilege: give each server access only to the data it needs, and explicitly deny access to everything else.
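As an illustration of least privilege, a server that exposes file contents can resolve every requested path and refuse anything outside an explicitly allowed directory. The `safe_read` function and its single-root design are hypothetical, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path


def safe_read(allowed_root: Path, requested: str) -> str:
    """Read a file only if it resolves to a location inside allowed_root."""
    root = allowed_root.resolve()
    # resolve() collapses ".." segments and follows symlinks; an absolute
    # `requested` path replaces `root` entirely and is then rejected below.
    target = (root / requested).resolve()
    if not target.is_relative_to(root):
        raise PermissionError(f"access outside {root} is not allowed: {requested}")
    return target.read_text(encoding="utf-8")
```

Denying by default and allowing one directory keeps the check simple; a server needing more access would list additional roots explicitly rather than loosening the check.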